I have been so impressed with perplexity.ai’s knowledge engine that, over the past couple of weeks, I watched all the podcasts and interviews with Perplexity CEO Aravind Srinivas and CTO Denis Yarats.

Srinivas and Yarats give a roadmap to building an AI company:

- How their startup functions

- The pivots Perplexity had to take

- The founders’ path from PhD students to entrepreneurs

- The biography of Aravind Srinivas – his experience at OpenAI, DeepMind, and Google Brain

- How Perplexity fundraised, and how it got visibility among top industry actors

- Team selection, and attracting top engineering talent

- Not least, how the Perplexity technology works

- How Perplexity uses language models, and how it implements its own models running on its own inference infrastructure

- The search index component – how it is built, how it compares with Google’s search index, and how it evolved

- The importance of good scrapers, and of a good content-based page ranker

- Perplexity’s customer acquisition strategy

- How Perplexity compares to Google – and the lessons learned from closely following Google’s early roadmap.

Google was also founded by computer science PhD students – Larry Page and Sergey Brin. Google competed with a number of other web search companies, but followed a different path: it was relentlessly focused on attracting top engineering talent, and on its customers. Google’s monetization strategy came fairly late, and almost naturally, once customer adoption at scale had succeeded.

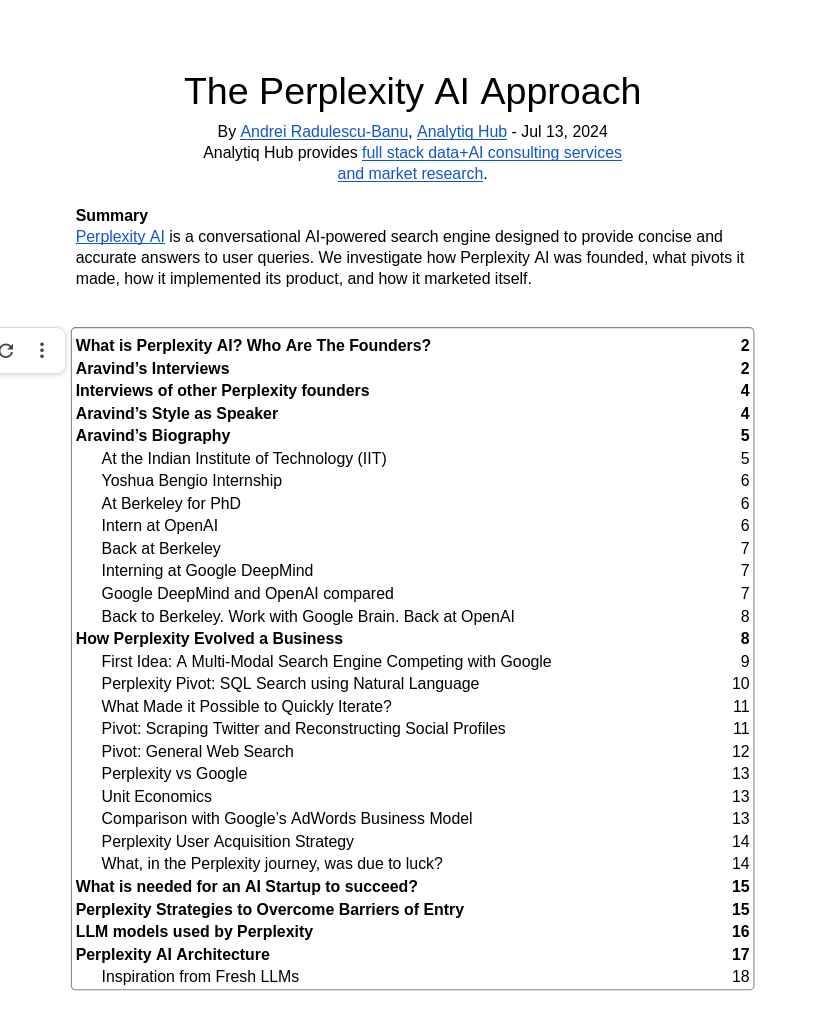

I’ve collected what I learned in The Perplexity AI Approach.

Hiring Language Model Engineers

For illustration, here is how Perplexity approaches hiring language model engineers:

“…Having seen how OpenAI went about this,” says Srinivas, “if you look at the people who did GPT-3, and eventually went on to start Anthropic – all of them are basically from a physics background. The Anthropic CEO, Dario Amodei, is a physics PhD. And Jared Kaplan is a physics professor, and he wrote the Scaling Laws paper.”

OpenAI succeeded in bringing these people in, Srinivas says, and that paid off well for OpenAI. If you look at the OpenAI engineering team, it is all Dropbox and Stripe people. They were solid infrastructure engineers, doing front end work – and now, AI work.

“It’s always been clear to me that, if you want to work in AI, both research and product, you do not need to already have been in AI.”

That was seen clearly with Johnny Ho, the Perplexity co-founder, who was not doing AI previously. He was a competitive programmer and a Wall Street trader. He worked at Quora for a year. He’s as good as anybody at picking up new things.

Furthermore, Srinivas says, LLMs are right now better understood by the people who use them to build things than by the people who make them, who understand gradient descent and know how to train a model.

Yarats himself, as an engineer at Facebook in Menlo Park, noticed that the company had a very exclusive culture, where only credentialed researchers with PhDs would do research. Yarats did not find that to be a good thing.

Yarats later went on to complete his PhD in machine learning. But in his experience, having worked at multiple AI companies, “…it turns out that the best research scientists are also very good engineers,” he says.

“Looking at DeepMind versus OpenAI, the companies who made the most progress are companies who are also very good engineers,” Yarats says, pointing out that it was OpenAI, with an open engineering culture, that made the Generative AI discoveries, rather than the more hierarchical DeepMind.

The same holds for PhD students. To do good research, Yarats says, it is now necessary to also be a very good engineer who can quickly set up the infrastructure for multiple experiments. Lack of engineering skill slows you down. You end up running fewer experiments, and have less of a chance of reaching the outlier experiment that would have turned into your best project.

For this reason, Yarats recommends applying for a PhD in machine learning only after a couple of years of working in industry, once you have acquired more engineering skill.

So, what kind of AI engineers should companies look for?

“You want to find people who really want to get into AI, not people who are already good in AI,” Srinivas says. “Every company that has gotten big has done this in the early days. Google got Jeff Dean, who’s a compiler and a systems person, and Urs Hölzle, who is an assistant professor. Google told them – hey guys, we have the most interesting computer science problems to solve here, so come work on Search!”

Google did hire a few people for their skill with information retrieval and search. But most of the celebrity Google researchers ended up being non-specialists who wanted to get into that research field for the first time.

For more, check out The Perplexity AI Approach.